How to Set Up One Instance Belonging to Multiple ELBs

I set this up using the standard EC2 API and EC2 ELB command-line tools. First I launched an instance. I used my favorite Alestic image, the Ubuntu 8.04 Hardy Server AMI:

My default security group allows access to port 80, but if yours doesn't you will need to either change it (for this experiment) or use a security group that does allow port 80. Once my instance was running I sshed in (you should use your own instance's public DNS name here):

In the ssh session, I installed the Apache 2 web server:

Then I tested it out from my local machine:

The result, showing the HTML "It works!", means Apache is accessible on the instance.

Next, I set up two load balancers. On my local machine I did this:

Output: DNS-NAME lbOne-1123463794.us-east-1.elb.amazonaws.com

Output: DNS-NAME lbTwo-108037856.us-east-1.elb.amazonaws.com

Once the load balancers were created, I added the instance to both as follows:

Output: INSTANCE-ID i-0fdcda66

Output: INSTANCE-ID i-0fdcda66

Okay, EC2 accepted those API calls. Next, I tested that the setup actually works, directing HTTP traffic from both ELBs to that one instance:
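If you would rather script the registration and this check than run them by hand, here is a minimal Python sketch. It swaps in boto3's Classic ELB client (`boto3.client("elb")`), which is not the tooling used in this post, and `register_everywhere` and `serves_page` are hypothetical helper names.

```python
import urllib.request


def register_everywhere(elb_client, instance_id, lb_names):
    """Register one instance with each Classic ELB in lb_names.

    elb_client is assumed to be a boto3 Classic ELB client, e.g. boto3.client("elb").
    Returns a dict mapping each load balancer name to the instance IDs it reports.
    """
    registered = {}
    for name in lb_names:
        response = elb_client.register_instances_with_load_balancer(
            LoadBalancerName=name,
            Instances=[{"InstanceId": instance_id}],
        )
        registered[name] = [i["InstanceId"] for i in response["Instances"]]
    return registered


def serves_page(dns_names, marker="It works!", fetch=None):
    """Fetch http://<dns-name>/ for each load balancer and report whether
    the response body contains the marker text (Apache's default page)."""
    if fetch is None:
        def fetch(url):
            with urllib.request.urlopen(url, timeout=10) as resp:
                return resp.read().decode("utf-8", errors="replace")
    return {name: marker in fetch(f"http://{name}/") for name in dns_names}
```

For this walkthrough, usage would look like `register_everywhere(boto3.client("elb", region_name="us-east-1"), "i-0fdcda66", ["lbOne", "lbTwo"])` followed by `serves_page(["lbOne-1123463794.us-east-1.elb.amazonaws.com", "lbTwo-108037856.us-east-1.elb.amazonaws.com"])`, assuming the load balancers are named lbOne and lbTwo as their DNS names suggest. The `fetch` parameter exists only so the check can be exercised without network access.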

Both requests, one through each load balancer, produce "It works!", the same as when we accessed the instance directly. This shows that multiple ELBs can direct traffic to a single instance.

What Can This Be Used For?

Here are some of the things you might consider doing with this technique.

Creating different tiers of service

Using two different ELBs that both contain (some of) the same instance(s) can be useful for creating different classes of service without dedicating instances to the lower service class. For example, you might offer a "best-effort" service with no SLA to non-paying customers, and offer an SLA with performance guarantees to paying customers. Here are example requirements:

- Paid requests must be serviced within a certain maximum time.

- Non-paid requests must not require dedicated instances.

- Non-paid requests must not utilize more than a certain number (n) of available instances.
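Under these requirements, the two ELB pools might be laid out as in the following minimal sketch. It assumes the paid-tier ELB holds every instance while the free-tier ELB holds only a fixed subset of n of those same instances; the function names and instance IDs are hypothetical.

```python
def tier_pools(all_instances, n):
    """Return (paid_pool, free_pool) ELB memberships for one shared fleet.

    all_instances: every instance registered with the paid-tier ELB.
    n: the most instances free-tier traffic may use.
    """
    if not 0 <= n <= len(all_instances):
        raise ValueError("n must be between 0 and the number of instances")
    paid_pool = list(all_instances)  # paid traffic may use every instance
    free_pool = paid_pool[:n]        # free traffic shares the first n instances
    return paid_pool, free_pool


def meets_requirements(paid_pool, free_pool, n):
    """Check the two free-tier requirements listed above."""
    # No dedicated instances: every free-tier instance also serves paid traffic.
    no_dedicated = set(free_pool) <= set(paid_pool)
    # Capped: free-tier traffic reaches at most n distinct instances.
    capped = len(set(free_pool)) <= n
    return no_dedicated and capped
```

In this sketch, any instances added later (for example by Auto Scaling) would go only into the paid pool, so the free pool stays capped at n.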

("Huh?" you say. "Won't Auto Scaling terminate the original n instances that were in the paying-tier ELB pool?" No. Auto Scaling does not consider instances that were already in an ELB when the Auto Scaling group was created. As soon as I find this documented I'll add the source here. In the meantime, trust me, it's true.)

Splitting traffic across multiple destination ports

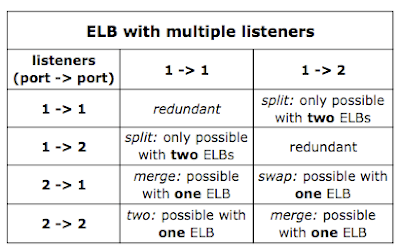

The ELB forwarding specification is called a listener. A listener is the combination of source-port, protocol type (HTTP or TCP), and the destination port: it describes what is forwarded and to where. In the above demonstration I created two ELBs that both have the same listener, forwarding HTTP/80 to port 80 on the instance. But the ELBs can also be configured to forward HTTP/80 traffic to different destination ports. Or, the the ELBs can be configured to forward traffic from different source ports to the same destination port. Or from different source ports to different destination ports. Here's a diagram depicting the various possible combinations of listeners:

As the table above shows, there are only a few combinations of two listeners. Two listeners that are the same (1 -> 1, 1 -> 1 or 1 -> 2, 1 -> 2) are redundant (more on this below); as explained above, they probably only make sense for providing different classes of service using the same instances. Two listeners forwarding the same source port to different destination ports (1 -> 1, 1 -> 2) can only be achieved with two ELBs. Two listeners that "merge" different source ports into the same destination port (1 -> 1, 2 -> 1 or 1 -> 2, 2 -> 2) can be done with a single ELB. So can two listeners that "swap" source and destination ports between them (1 -> 2, 2 -> 1), and two listeners that map different source ports to different destination ports (1 -> 1, 2 -> 2).
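The case analysis above can be written as a small classifier. This is a hypothetical helper, not part of any EC2 tool: each listener is modeled as an (lb_port, instance_port) pair with the protocol ignored, and the function returns the pattern name used above plus whether a single ELB can hold both listeners.

```python
from typing import NamedTuple


class Listener(NamedTuple):
    lb_port: int        # source port on the load balancer
    instance_port: int  # destination port on the instance


def classify(a: Listener, b: Listener):
    """Return (pattern, fits_in_one_elb) for a pair of listeners."""
    if a == b:
        # Identical listeners share a source port, so one ELB cannot hold
        # both; duplicating them means using a second ELB.
        return "redundant", False
    if a.lb_port == b.lb_port:
        # Same source port, different destination ports: needs two ELBs.
        return "split", False
    if a.instance_port == b.instance_port:
        # Different source ports merged into one destination port.
        return "merge", True
    if a.lb_port == b.instance_port and a.instance_port == b.lb_port:
        # Each listener's source port is the other's destination port.
        return "swap", True
    # Different source ports to different destination ports.
    return "distinct", True
```

The only pair that forces a second ELB without being an exact duplicate is the "split" case, which matches the conclusion drawn below.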

Did you notice it? I said "can be done with a single ELB". Did you know you can have multiple listeners on a single ELB? It's true. If we wanted to forward a number of different source ports all to the same destination port, we could accomplish that without creating multiple ELBs: instead we could create one ELB with multiple listeners. Likewise, if we wanted to forward different source ports to different destination ports, we could do that with multiple listeners on a single ELB - for example, one ELB with two listeners handling HTTP/80 and TCP/443 (HTTPS). Swapping source and destination ports between two listeners can also be done with a single ELB. What you can't do with a single ELB is split traffic from a single source port to multiple destination ports. Try it - the EC2 API will reject an attempt to build a load balancer with multiple listeners sharing the same source port:

Output: elb-create-lb: Malformed input-Malformed service URL: Reason: elasticloadbalancing.amazonaws.com - https://elasticloadbalancing.amazonaws.com

Usage:

elb-create-lb LoadBalancerName --availability-zones value[,value...] --listener "protocol=value, lb-port=value, instance-port=value" [ --listener "protocol=value, lb-port=value, instance-port=value" ...] [General Options]

For more information and a full list of options, run "elb-create-lb --help"

Granted, the error message misidentifies the problem, but the request will not work.
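You can catch this mistake before calling the API. The sketch below is a hypothetical client-side check: it rejects listener lists that reuse a load-balancer port, and otherwise returns the listeners as dictionaries in the shape boto3's Classic ELB `create_load_balancer` call expects (`Protocol`, `LoadBalancerPort`, `InstancePort`). boto3 is a swap-in here, not the tool this post uses.

```python
def build_listeners(specs):
    """specs: iterable of (protocol, lb_port, instance_port) tuples.

    Raises ValueError if two listeners share an lb_port, mirroring the
    rule that a single ELB cannot split one source port across several
    destination ports.
    """
    seen = {}  # lb_port -> instance_port of the listener that claimed it
    listeners = []
    for protocol, lb_port, instance_port in specs:
        if lb_port in seen:
            raise ValueError(
                f"lb-port {lb_port} is already used by the listener "
                f"forwarding to instance port {seen[lb_port]}"
            )
        seen[lb_port] = instance_port
        listeners.append(
            {"Protocol": protocol, "LoadBalancerPort": lb_port, "InstancePort": instance_port}
        )
    return listeners
```

For example, `build_listeners([("HTTP", 80, 80), ("TCP", 443, 443)])` succeeds, matching the HTTP/80 plus TCP/443 case above, while `build_listeners([("HTTP", 80, 80), ("HTTP", 80, 8080)])` raises `ValueError`. A valid result could then be passed as `Listeners=` to `boto3.client("elb").create_load_balancer(...)`.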

Where does that leave us? The only potentially useful case for using two separate ELBs for multiple ports, with the same instance(s) behind the scenes, is where you want to split traffic from a single source port across different destination ports on the instance(s). Practically speaking, this might make sense as part of implementing different classes of service using the same instances. In any case, it would require differentiating the traffic by address (to direct it to the appropriate ELB), so the fact that the actual destination is on a different port is only mildly interesting.

Redundancy - Not.

Putting the same instance behind multiple load balancers is a technique used with hardware load balancers to provide redundancy: if one load balancer fails, the other is already in place and able to take over. However, EC2 Elastic Load Balancers are not dedicated hardware, and they may not fail independently of each other. I say "may not" because there is little public information about how ELB is implemented, and anyone who knows how independent ELBs are of each other isn't saying. Given that uncertainty, there seem to be no clear redundancy benefits from placing one EC2 instance into multiple ELBs.

With Auto Scaling - Maybe, but why?

This technique might be able to circumvent a limitation inherent in Auto Scaling groups: an instance may only belong to a single Auto Scaling group at a time. Because Auto Scaling groups can manage ELBs, you could theoretically add the same instance to both ELBs and then create two Auto Scaling groups, one managing each ELB. Your instance would then be in two Auto Scaling groups.

Unfortunately you gain nothing from this: as mentioned above, Auto Scaling groups ignore instances that already existed in an ELB when the Auto Scaling group was created. So, it appears pointless to use this technique to circumvent the Auto Scaling limitation.

[The Auto Scaling limitation makes sense: if an instance is in more than one Auto Scaling group, a scaling activity in one group could decide to terminate that shared instance. This would cause the other Auto Scaling group to launch a new instance immediately to replace the shared instance that was terminated. This "churn" is prevented by forbidding an instance from being in more than one Auto Scaling group.]
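The churn argument can be illustrated with a toy simulation. Everything here is hypothetical (the group layout, the instance IDs, and a naive "launch until desired capacity is met" rule); it is not how Auto Scaling is implemented, only a way to see why a shared instance causes trouble.

```python
def scale_in_with_shared(group_a, group_b, shared):
    """Group A scales in by terminating `shared`; group B then reconciles.

    group_a, group_b: dicts with "desired" (int) and "members" (set of IDs).
    Returns a list of (group, action, instance) events.
    """
    events = []
    # Group A scales in by one and happens to pick the shared instance.
    group_a["desired"] -= 1
    group_a["members"].discard(shared)
    events.append(("A", "terminate", shared))
    # Terminating the instance also removes it from group B...
    group_b["members"].discard(shared)
    # ...so group B, now below its desired capacity, launches replacements.
    launched = 0
    while len(group_b["members"]) < group_b["desired"]:
        launched += 1
        replacement = f"i-replacement-{launched}"  # hypothetical new instance ID
        group_b["members"].add(replacement)
        events.append(("B", "launch", replacement))
    return events
```

Starting from `{"desired": 2, "members": {"i-a1", "i-shared"}}` for group A and `{"desired": 2, "members": {"i-b1", "i-shared"}}` for group B, the scale-in produces one termination in A and one launch in B, and the fleet still contains three distinct instances: the scale-in decision achieved nothing except churn.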

In short, the interesting ability of an instance to belong to more than one ELB at once has limited practical applicability; it is mainly useful for implementing different service classes using the same instances. If you encounter any other useful scenarios for this technique, please share them.

Would you happen to know what algorithm the ELB uses behind the scenes? Is it round-robin or is it smarter?

thanks....

@s,

You've come to the right place. Check out this article on my blog:

http://clouddevelopertips.blogspot.com/2009/07/elastic-in-elastic-load-balancing-elb.html