Tuesday, February 9, 2010

Blog and RSS Feed Have Moved

Hey everyone. Just to let you know I've moved this blog to www.shlomoswidler.com and the feed to FeedBurner at http://feeds.feedburner.com/CloudDeveloperTips . Feel free to check out the new locations. Same great content, new and improved look.

Sunday, January 17, 2010

Creating Consistent Snapshots of a Live Instance with XFS on a Boot-from-EBS AMI

Eric Hammond has taught us how to create consistent snapshots of EBS volumes. Amazon has allowed us to use EBS snapshots as AMIs, providing a persistent root filesystem. Wouldn't it be great if you could use both of these techniques together, to take a consistent snapshot of the root filesystem without stopping the instance? Read on for my instructions on how to create an XFS-formatted boot-from-EBS AMI, which allows consistent live snapshots of the root filesystem to be created.

The technique presented below owes its success to the Canonical Ubuntu team, who created a kernel image that already contains XFS support. That's why these instructions use the official Canonical Ubuntu 9.10 Karmic Koala AMI - because it has XFS support built in. There may be other AKIs out there with XFS support built in - if so, the technique should work with them, too.

How to Do It

The general steps are as follows:

- Run an instance and set it up the way you like.

- Create an XFS-formatted EBS volume.

- Copy the contents of the instance's root filesystem to the EBS volume.

- Unmount the EBS volume, snapshot it, and register it as an AMI.

- Launch an instance of the new AMI.

1. Run an instance and set it up the way you like.

As mentioned above, I use the official Canonical Ubuntu 9.10 Karmic Koala AMI (currently ami-1515f67c for 32-bit architecture - see the table on Alestic.com for the most current Ubuntu AMI IDs).

    ami=ami-1515f67c
    security_groups=default
    keypair=my-keypair
    instance_type=m1.small
    ec2-run-instances $ami -t $instance_type \
      -g $security_groups -k $keypair

Wait until the ec2-describe-instances command shows the instance is running and then ssh into it:

    ssh -i my-keypair ubuntu@ec2-1-2-3-4.amazonaws.com

Now that you're in, set up the instance's root filesystem the way you want. Don't forget that you probably want to run

    sudo apt-get update

to allow you to pull in the latest packages.

In our case we'll want to install ec2-consistent-snapshot, as per Eric Hammond's article:

    codename=$(lsb_release -cs)
    echo "deb http://ppa.launchpad.net/alestic/ppa/ubuntu $codename main" | \
      sudo tee /etc/apt/sources.list.d/alestic-ppa.list
    sudo apt-key adv --keyserver keyserver.ubuntu.com \
      --recv-keys BE09C571
    sudo apt-get update
    sudo apt-get install -y ec2-consistent-snapshot
    sudo PERL_MM_USE_DEFAULT=1 cpan Net::Amazon::EC2

2. Create an XFS-formatted EBS volume.

First, install the XFS tools:

    sudo apt-get install -y xfsprogs

These utilities allow you to format filesystems using XFS and to freeze and unfreeze the XFS filesystem. They are not necessary in order to read from XFS filesystems, but we want these programs installed on the AMI we create because they are used in the process of creating a consistent snapshot.

Next, create an EBS volume in the availability zone your instance is running in. I use a 10GB volume, but you can use any size and grow it later using this technique. This command is run on your local machine:

    ec2-create-volume --size 10 -z $zone

Wait until the ec2-describe-volumes command shows the volume is available and then attach it to the instance:

    ec2-attach-volume $volume --instance $instance \
      --device /dev/sdh

Back on the instance, format the volume with XFS:

    sudo mkfs.xfs /dev/sdh
    sudo mkdir -m 000 /vol
    sudo mount -t xfs /dev/sdh /vol

Now you should have an XFS-formatted EBS volume, ready for you to copy the contents of the instance's root filesystem.
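The "wait until ... shows ..." steps in this walkthrough can be scripted rather than checked by hand. Below is a minimal sketch of a polling helper. The name wait_for, its argument order, and the stand-in command are all assumptions made for illustration, and it assumes the state word (such as "available") appears somewhere in the command's output.

```shell
# wait_for EXPECTED MAX_TRIES DELAY COMMAND...
# Re-runs COMMAND until its output contains EXPECTED, or gives up.
wait_for() {
  expected=$1; max_tries=$2; delay=$3
  shift 3
  try=1
  while [ "$try" -le "$max_tries" ]; do
    if "$@" 2>/dev/null | grep -q -- "$expected"; then
      return 0
    fi
    sleep "$delay"
    try=$((try + 1))
  done
  return 1
}

# Real use (assumes the EC2 API tools are configured and $volume is set):
#   wait_for available 60 5 ec2-describe-volumes "$volume"

# Self-contained demonstration: a stand-in command that reports
# "creating" twice and "available" on the third call.
state_file=$(mktemp)
echo 0 > "$state_file"
fake_describe_volumes() {
  n=$(( $(cat "$state_file") + 1 ))
  echo "$n" > "$state_file"
  if [ "$n" -ge 3 ]; then
    echo "VOLUME vol-00000000 available"
  else
    echo "VOLUME vol-00000000 creating"
  fi
}

wait_for available 5 0 fake_describe_volumes && echo "volume is available"
```

The same helper would cover the instance and snapshot waits by changing the expected word and the command.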

3. Copy the contents of the instance's root filesystem to the EBS volume.

Here's the command to copy over the entire root filesystem, preserving soft-links, onto the mounted EBS volume - but ignoring the volume itself:

    sudo rsync -avx --exclude /vol / /vol

My command reports that it copied about 444 MB to the EBS volume.
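To see what the rsync options do before running them against a real root filesystem, here is a small sketch that uses throwaway directories. The directory and file names are made up for the demonstration. With a source that ends in a slash, the pattern /vol is anchored at the top of the transfer, so only the top-level vol directory is skipped. The -a option preserves symlinks, ownership, and permissions, and -x keeps rsync from descending into other mounted filesystems.

```shell
# Demonstrates --exclude and symlink preservation on temporary directories.
if command -v rsync >/dev/null 2>&1; then
  src=$(mktemp -d)
  dst=$(mktemp -d)
  mkdir -p "$src/etc" "$src/vol"
  echo "config" > "$src/etc/app.conf"
  echo "do not copy" > "$src/vol/already-here"
  ln -s etc/app.conf "$src/link-to-conf"

  # Same options as the real command, minus -v to keep the output short.
  rsync -ax --exclude /vol "$src/" "$dst/"

  # Lists what arrived in the destination; vol should be absent.
  ls -A "$dst"
else
  echo "rsync not installed; skipping demonstration"
fi
```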

4. Unmount the EBS volume, snapshot it, and register it as an AMI.

You're ready to create the AMI. On the instance do this:

sudo umount /vol

Now, back on your local machine, create the snapshot:

    ec2-create-snapshot $volume

Once ec2-describe-snapshots shows the snapshot is 100% complete, you can register it as an AMI. The AKI and ARI values used here should match the AKI and ARI that the instance is running - in this case, they are the default Canonical AKI and ARI for this AMI. Note that I give a descriptive "name" and "description" for the new AMI - this will make your life easier as the number of AMIs you create grows.

    kernel=aki-5f15f636
    ramdisk=ari-0915f660
    description="Ubuntu 9.10 Karmic formatted with XFS"
    ami_name=ubuntu-9.10-32-bit-ami-1515f67c-xfs
    ec2-register --snapshot $snapshot --kernel $kernel \
      --ramdisk $ramdisk "--description=$description" \
      --name=$ami_name --architecture i386 \
      --root-device-name /dev/sda1

This displays the newly registered AMI ID - let's say it's ami-00000000.

5. Launch an instance of the new AMI.

Here comes the moment of truth. Launch an instance of the newly registered AMI:

    ami=ami-00000000
    security_groups=default
    keypair=my-keypair
    instance_type=m1.small
    ec2-run-instances $ami -t $instance_type \
      -g $security_groups -k $keypair

Again, wait until ec2-describe-instances shows it is running and ssh into it:

    ssh -i my-keypair ubuntu@ec2-5-6-7-8.amazonaws.com

Now, on the instance, you should be able to see that the root filesystem is XFS with the mount command. The output should contain:

    /dev/sda1 on / type xfs (rw)
    ...

Use ec2-describe-instances to determine the volume ID of the root volume for the instance, then create the consistent snapshot:

    sudo ec2-consistent-snapshot --aws-access-key-id \
      $aws_access_key_id --aws-secret-access-key \
      $aws_secret_access_key --xfs-filesystem / $volumeID

The command should display the snapshot ID of the snapshot that was created.

Using ec2-consistent-snapshot and an XFS-formatted EBS AMI, you can create snapshots of the running instance without stopping it. Please comment below if you find this helpful, or with any other feedback.
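For readers curious about why XFS matters here, the idea behind a consistent live snapshot is an ordering: freeze the filesystem, start the snapshot, then unfreeze. The sketch below only illustrates that ordering and is not the source of ec2-consistent-snapshot. The function names, the DRY_RUN switch, and the volume ID vol-00000000 are assumptions, and by default it prints the commands instead of running them. On a frozen root filesystem any command that writes to disk will block, which is one reason to use the real tool rather than a hand-rolled script.

```shell
# DRY_RUN=1 (the default in this sketch) prints commands instead of running them.
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "would run: $*"
  else
    "$@"
  fi
}

# consistent_snapshot MOUNT_POINT VOLUME_ID
consistent_snapshot() {
  mount_point=$1
  volume_id=$2
  status=0
  run sync                                            # flush dirty pages first
  run sudo xfs_freeze -f "$mount_point" || return 1   # suspend writes
  run ec2-create-snapshot "$volume_id" || status=$?   # start the snapshot
  run sudo xfs_freeze -u "$mount_point" || status=$?  # thaw even if the snapshot failed
  return "$status"
}

consistent_snapshot / vol-00000000
```

The unfreeze step runs whether or not the snapshot call succeeds, so a failed API call does not leave the filesystem frozen.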

Labels: ami, boot from ebs, ebs, elastic block store, snapshot

Thursday, December 24, 2009

Use ELB to Serve Multiple SSL Domains on One EC2 Instance

This is one of the coolest uses of Amazon's ELB I've seen yet. Check out James Elwood's article.

You may know that you can't serve more than one SSL-enabled domain on a single EC2 instance. Okay, you can, but only via a wildcard certificate (limited) or a multi-domain certificate (hard to maintain). So you really can't do it properly. Serving multiple SSL domains is one of the main use cases behind the popular request to support multiple IP addresses per instance.

Why can't you do it "normally"?

The reason why it doesn't work is this: The HTTPS protocol encrypts the HTTP request, including the Host: header within. This header identifies what actual domain is being requested - and therefore what SSL certificate to use to authenticate the request. But the server has to present its certificate while the encrypted connection is being set up, before it can read the Host: header. Without knowing what domain is being requested, there's no way to choose the correct SSL certificate! So a web server can only use one SSL certificate per IP address and port.

If you have multiple IP addresses then you can serve different SSL domains from different IP addresses. The VirtualHost directive in Apache (or similar mechanisms in other web servers) can look at the target IP address in the TCP packets - not in the HTTP Host: header - to figure out which IP address is being requested, and therefore which domain's SSL certificate to use.

But without multiple IP addresses on an EC2 instance, you're stuck serving only a single SSL-enabled domain from each EC2 instance.

How can you?

Really, read James' article. He explains it very nicely.

How much does it cost?

Much less than two EC2 instances, that's for sure. According to the EC2 pricing charts, ELB costs:

- $0.025 per Elastic Load Balancer-hour (or partial hour) ($0.028 in us-west-1 and eu-west-1)

- $0.008 per GB of data processed by an Elastic Load Balancer

Using the ELB-for-multiple-SSL-sites trick saves you 75% of the cost of using separate instances.
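The 75% figure can be reproduced with a line of arithmetic. The sketch below compares the hourly price of one extra ELB with the hourly price of one extra instance. The ELB price comes from the list above; the instance price of $0.10 per hour is an assumption, chosen because it is consistent with the 75% claim, and the per-GB data processing charge is ignored. The function name saving_percent is made up for this example.

```shell
# saving_percent ELB_HOURLY INSTANCE_HOURLY
# Prints how much cheaper an extra ELB is than an extra instance, in percent.
saving_percent() {
  awk -v elb="$1" -v inst="$2" 'BEGIN { printf "%.0f\n", (1 - elb / inst) * 100 }'
}

elb_hour=0.025        # from the pricing list above
instance_hour=0.10    # ASSUMED instance price, not stated in the post

echo "saving per extra SSL domain: $(saving_percent "$elb_hour" "$instance_hour")%"
```

Plugging in a different instance price shows how sensitive the saving is to that assumption.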

Thanks, James!

Labels: aws, ec2, elastic load balancing, elb, ssl

Thursday, December 10, 2009

Read-After-Write Consistency in Amazon S3

S3 has an "eventual consistency" model, which presents certain limitations on how S3 can be used. Today, Amazon released an improvement called "read-after-write-consistency" in the EU and US-west regions (it's there, hidden at the bottom of the blog post). Here's an explanation of what this is, and why it's cool.

What is Eventual Consistency?

Consistency is a key concept in data storage: it describes when changes committed to a system are visible to all participants. Classic transactional databases employ various levels of consistency, but the gold standard is that after a transaction commits, the changes are guaranteed to be visible to all participants. A change committed at millisecond 1 is guaranteed to be available to all views of the system - all queries - immediately thereafter.

Eventual consistency relaxes the rules a bit, allowing a time lag between the point the data is committed to storage and the point where it is visible to all others. A change committed at millisecond 1 might be visible to all immediately. It might not be visible to all until millisecond 500. It might not even be visible to all until millisecond 1000. But, eventually it will be visible to all clients. Eventual consistency is a key engineering tradeoff employed in building distributed systems.

One issue with eventual consistency is that there's no theoretical limit to how long you need to wait until all clients see the committed data. A delay must be employed (either explicitly or implicitly) to ensure the changes will be visible to all clients.

Practically speaking, I've observed that changes committed to S3 become visible to all in less than 2 seconds. If your distributed system reads data shortly after it was written to eventually consistent storage (such as S3), you'll experience higher latency as a result of the compensating delays.
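One common way to build the compensating delay is to retry the read, pausing longer after each miss. The following is a minimal sketch of that idea. The name read_with_backoff, its arguments, and the stand-in reader are assumptions for illustration, and the stand-in simulates an object that becomes visible on the third read rather than talking to S3.

```shell
# read_with_backoff MAX_TRIES FIRST_DELAY COMMAND...
# Runs COMMAND until it succeeds, doubling the pause after each failure.
read_with_backoff() {
  max_tries=$1
  delay=$2
  shift 2
  attempt=1
  while [ "$attempt" -le "$max_tries" ]; do
    if "$@"; then
      return 0
    fi
    if [ "$attempt" -lt "$max_tries" ]; then
      sleep "$delay"
    fi
    delay=$((delay * 2))
    attempt=$((attempt + 1))
  done
  return 1
}

# Stand-in for a read from eventually consistent storage: it fails twice
# and succeeds on the third call.
attempts_file=$(mktemp)
echo 0 > "$attempts_file"
fake_get_object() {
  n=$(( $(cat "$attempts_file") + 1 ))
  echo "$n" > "$attempts_file"
  [ "$n" -ge 3 ]
}

# A first delay of 0 keeps the demonstration instant; real code would
# start at a second or so.
read_with_backoff 5 0 fake_get_object &&
  echo "object visible after $(cat "$attempts_file") reads"
```

Every failed attempt adds to the latency seen by the caller, which is the cost the paragraph above describes.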

What is Read-After-Write Consistency?

Read-after-write consistency tightens things up a bit, guaranteeing immediate visibility of new data to all clients. With read-after-write consistency, a newly created object or file or table row will immediately be visible, without any delays.

Note that read-after-write is not complete consistency: there's also read-after-update and read-after-delete. Read-after-update consistency would allow edits to an existing file or changes to an already-existing object or updates of an existing table row to be immediately visible to all clients. That's not the same thing as read-after-write, which is only for new data. Read-after-delete would guarantee that reading a deleted object or file or table row will fail for all clients, immediately. That, too, is different from read-after-write, which only relates to the creation of data.

Why is Read-After-Write Consistency Useful?

Read-after-write consistency allows you to build distributed systems with less latency. As touched on above, without read-after-write consistency you'll need to incorporate some kind of delay to ensure that the data you just wrote will be visible to the other parts of your system.

But no longer. If you use S3 in the US-west or EU regions, your systems need not wait for the data to become available.

Why Only in the AWS US-west and EU Regions?

Read-after-write consistency for AWS S3 is only available in the US-west and EU regions, not the US-Standard region. I asked Jeff Barr of AWS blogging fame why, and his answer makes a lot of sense:

"This is a feature for EU and US-West. US Standard is bi-coastal and doesn't have read-after-write consistency."

Aha! I had forgotten about the way Amazon defines its S3 regions. US-Standard has servers on both the east and west coasts (remember, this is S3 not EC2) in the same logical "region". The engineering challenges in providing read-after-write consistency in a smaller geographical area are greatly magnified when that area is expanded. The fundamental physical limitation is the speed of light: light takes at least 16 milliseconds to cross the US coast-to-coast (that's in a vacuum - it takes at least four times as long over the internet due to the latency introduced by routers and switches along the way).
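The 16 millisecond figure is easy to check. The sketch below divides a coast-to-coast distance by the speed of light. The distance of roughly 4,800 km is an assumption, since the post gives only the result, and the function name one_way_ms is made up for this example.

```shell
# one_way_ms DISTANCE_KM SPEED_KM_PER_S FACTOR
# Prints the one-way travel time in milliseconds, scaled by FACTOR.
one_way_ms() {
  awk -v d="$1" -v c="$2" -v f="$3" 'BEGIN { printf "%.1f\n", d / c * 1000 * f }'
}

distance_km=4800              # ASSUMED coast-to-coast distance
light_km_per_s=299792.458     # speed of light in a vacuum

echo "in a vacuum:        $(one_way_ms "$distance_km" "$light_km_per_s" 1) ms"
echo "four times as long: $(one_way_ms "$distance_km" "$light_km_per_s" 4) ms"
```

A round trip doubles these numbers, and a write that must be acknowledged by both coasts pays at least one round trip.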

If you use S3 and want to take advantage of the read-after-write consistency, make sure you understand the cost implications: the US-west and EU regions have higher storage and bandwidth costs than the US-Standard region.

Next Up: SQS Improvements?

Some vague theorizing:

It's been suggested that AWS Simple Queue Service leverages S3 under the hood. The improved S3 consistency model can be used to provide better consistency for SQS as well. Is this in the works? Jeff Barr, any comment? :-)

Labels: aws, consistency, s3

Friday, December 4, 2009

The Open Cloud Computing Interface at IGT2009

Today I participated in the Cloud Standards & Interoperability panel at the IGT2009 conference, together with Shahar Evron of Zend Technologies, and moderated by Reuven Cohen. Reuven gave an overview of his involvement with various governments on the efforts to define and standardize "cloud", and Shahar presented an overview of the Zend Simple Cloud API (for PHP). I presented an overview of the Open Grid Forum's Open Cloud Computing Interface (OCCI).

The slides include a 20,000-foot view of the specification, a 5,000-foot view of the specification, and an eye-level view in which I illustrated the metadata travelling over the wire using the HTTP Header rendering.

Here's my presentation.

Tuesday, November 17, 2009

How to Work with Contractors on AWS EC2 Projects

Recently I answered a question on the EC2 forums about how to give third parties access to EC2 instances. I noticed there's not a lot of info out there about how to work with contractors, consultants, or even internal groups to whom you want to grant access to your AWS account. Here's how.

First, a Caveat

Please be very selective when you choose a contractor. You want to make sure you choose a candidate who can actually do the work you need - and unfortunately, not everyone who advertises as such can really deliver the goods. Reuven Cohen's post about choosing a contractor/consultant for cloud projects examines six key factors to consider:

What's Your Skill Level?

The best way to allow a contractor access to your resources depends on your level of familiarity with the EC2 environment and with systems administration in general.

If you know your way around the EC2 toolset and you're comfortable managing SSH keypairs, then you probably already know how to allow third-party access safely. This article is not meant for you. (Sorry!)

If you don't know your way around the EC2 toolset, specifically the command-line API tools, and the AWS Management Console or the ElasticFox Firefox Extension, then you will be better off allowing the contractor to launch and configure the EC2 resources for you. The next section is for you.

Giving EC2 Access to a Third Party

[An aside: It sounds strange, doesn't it? "Third party". Did I miss two parties already? Was there beer? Really, though, it makes sense. A third party is someone who is not you (you're the first party) and not Amazon (they're the counterparty, or the second party). An outside contractor is a third party.]

Let's say you want a contractor to launch some EC2 instances for you and to set them up with specific software running on them. You also want them to set up automated EBS snapshots and other processes that will use the EC2 API.

What you should give the contractor

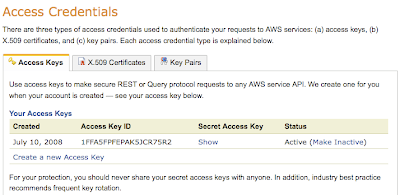

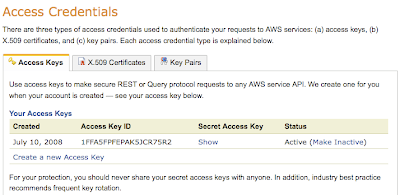

Give the contractor your Access Key ID and your Secret Access Key, which you should get from the Security Credentials page:

The Access Key ID is not a secret - but the Secret Access Key is, so make sure you transfer it securely. Don't send it over email! Use a private DropBox or other secure method.

Don't give out the email address and password that allows you to log into the AWS Management Console. You don't want anyone but you to be able to change the billing information or to sign you up for new services. Or to order merchandise from Amazon.com using your account (!).

What the contractor will do

Using ElasticFox and your Access Key ID and Secret Access Key the contractor will be able to launch EC2 instances and make all the necessary configuration changes on your account. Plus they'll be able to put these credentials in place for automated scripts to make EC2 API calls on your behalf - like to take an EBS snapshot. [There are some rare exceptions which will require your X.509 Certificates and the use of the command-line API tools.]

For example, here's what the contractor will do to set up a Linux instance:

What deliverables to expect from the contractor

When he's done, the contractor will give you a few things. These should include:

Plus, ask your contractor to set up the Security Groups so you will have the authorization you need to access your EC2 deployment from your location.

And, of course, before you release the contractor you should verify that everything works as expected.

What to do when the contractor's engagement is over

When your contractor no longer needs access to your EC2 account you should create new access key credentials (see the "Create a new Access Key" link on the Security Credentials page mentioned above).

But don't disable the old credentials just yet. First, update any code the contractor installed to use the new credentials and test it.

Once you're sure the new credentials are working, disable the credentials given to the contractor (the "Make Inactive" link).

The above guidelines also apply to working with internal groups within your organization. You might not need to revoke their credentials, depending on their role - but you should follow the suggestions above so you can if you need to.

First, a Caveat

Please be very selective when you choose a contractor. You want to make sure you choose a candidate who can actually do the work you need - and unfortunately, not everyone who advertises as such can really deliver the goods. Reuven Cohen's post about choosing a contractor/consultant for cloud projects examines six key factors to consider:

- Experience: experience solving real world problems is probably more important than anything else.

- Code: someone who can produce running code is often more useful than someone who just makes recommendations for others to follow.

- Community Engagement: discussion boards are a great way to gauge experience, and provide insight into the capabilities of the candidate.

- Blogs & Whitepapers: another good way to determine a candidate's insight and capabilities.

- Interview: ask the candidate questions to gauge their qualifications.

- References: do your homework and make sure the candidate really did what s/he claims to have done.

What's Your Skill Level?

The best way to allow a contractor access to your resources depends on your level of familiarity with the EC2 environment and with systems administration in general.

If you know your way around the EC2 toolset and you're comfortable managing SSH keypairs, then you probably already know how to allow third-party access safely. This article is not meant for you. (Sorry!)

If you don't know your way around the EC2 toolset, specifically the command-line API tools, and the AWS Management Console or the ElasticFox Firefox Extension, then you will be better off allowing the contractor to launch and configure the EC2 resources for you. The next section is for you.

Giving EC2 Access to a Third Party

[An aside: It sounds strange, doesn't it? "Third party". Did I miss two parties already? Was there beer? Really, though, it makes sense. A third party is someone who is not you (you're the first party) and not Amazon (they're the counterparty, or the second party). An outside contractor is a third party.]

Let's say you want a contractor to launch some EC2 instances for you and to set them up with specific software running on them. You also want them to set up automated EBS snapshots and other processes that will use the EC2 API.

What you should give the contractor

Give the contractor your Access Key ID and your Secret Access Key, which you should get from the Security Credentials page:

The Access Key ID is not a secret - but the Secret Access Key is, so make sure you transfer it securely. Don't send it over email! Use a private DropBox or other secure method.

Don't give out the email address and password that allows you to log into the AWS Management Console. You don't want anyone but you to be able to change the billing information or to sign you up for new services. Or to order merchandise from Amazon.com using your account (!).

What the contractor will do

Using ElasticFox and your Access Key ID and Secret Access Key the contractor will be able to launch EC2 instances and make all the necessary configuration changes on your account. Plus they'll be able to put these credentials in place for automated scripts to make EC2 API calls on your behalf - like to take an EBS snapshot. [There are some rare exceptions which will require your X.509 Certificates and the use of the command-line API tools.]

For example, here's what the contractor will do to set up a Linux instance:

1. Install ElasticFox and put in your access credentials, allowing him access to your account.

2. Set up a security group allowing him to access the instance.

3. Create a keypair, saving the private key to his machine (and to give to you later).

4. Choose an appropriate AMI from among the many available. (I recommend the Alestic Ubuntu AMIs).

5. Launch an instance of the chosen AMI, in the security group, using the keypair.

6. Once the instance is launched he'll SSH into the instance and set it up. He'll use the instance's public IP address and the private key half of the keypair (from step 3), and the user name (most likely "root") to do this.

What deliverables to expect from the contractor

When he's done, the contractor will give you a few things. These should include:

- the instance ids of the instances, their IP addresses, and a description of their roles.

- the names of any load balancers, auto scaling groups, etc. created.

- the private key he created in step 3 and the login name (usually "root"). Make sure you get this via a secure communications method - it allows privileged access to the instances.

Plus, ask your contractor to set up the Security Groups so you will have the authorization you need to access your EC2 deployment from your location.

And, of course, before you release the contractor you should verify that everything works as expected.

What to do when the contractor's engagement is over

When your contractor no longer needs access to your EC2 account you should create new access key credentials (see the "Create a new Access Key" link on the Security Credentials page mentioned above).

But don't disable the old credentials just yet. First, update any code the contractor installed to use the new credentials and test it.

Once you're sure the new credentials are working, disable the credentials given to the contractor (the "Make Inactive" link).
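Rotation is much easier if every automated script reads the credentials from one place. Here is a minimal sketch of that arrangement, assuming a single file that scripts source. The file location, the function name write_credentials, the variable names, and the key values are all placeholders for illustration, not something the contractor's code is guaranteed to use.

```shell
# One credentials file that every script sources.
cred_file=$(mktemp)        # stands in for a fixed path of your choosing
chmod 600 "$cred_file"     # readable and writable by the owner only

# write_credentials FILE ACCESS_KEY_ID SECRET_ACCESS_KEY
write_credentials() {
  printf "export AWS_ACCESS_KEY_ID='%s'\nexport AWS_SECRET_ACCESS_KEY='%s'\n" \
    "$2" "$3" > "$1"
}

# The key pair the contractor was given (placeholder values).
write_credentials "$cred_file" OLDKEYID oldsecret
. "$cred_file"
echo "scripts now use $AWS_ACCESS_KEY_ID"

# Rotation: write the new key pair, re-test the scripts, and only then
# make the old key inactive.
write_credentials "$cred_file" NEWKEYID newsecret
. "$cred_file"
echo "scripts now use $AWS_ACCESS_KEY_ID"
```

With this layout, updating the contractor's code to the new credentials is a one-file change.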

The above guidelines also apply to working with internal groups within your organization. You might not need to revoke their credentials, depending on their role - but you should follow the suggestions above so you can if you need to.

Labels: aws, consulting, contractor, credentials, ec2

Tuesday, October 27, 2009

What Language Does the Cloud Speak, Now and In the Future?

You're a developer writing applications that use the cloud. Your code manipulates cloud resources, creating and destroying VMs, defining storage and networking, and gluing these resources together to create the infrastructure upon which your application runs. You use an API to perform these cloud operations - and this API is specific to the programming language and to the cloud provider you're using: for example, for Java EC2 applications you'd use typica, for Python EC2 applications you'd use boto, etc. But what's happening under the hood, when you call these APIs? How do these libraries communicate with the cloud? What language does the cloud speak?

I'll explore this question for today's cloud, and touch upon what the future holds for cloud APIs.

Java? Python? Perl? PHP? Ruby? .NET?

It's tempting to say that the cloud speaks the same programming language whose API you're using. Don't be fooled: it doesn't.

"Wait," you say. "All these languages have Remote Procedure Call (RPC) mechanisms. Doesn't the cloud use them?"

No. The reason why RPCs are not provided for every language is simple: would you want to support a product that needed to understand the RPC mechanism of many languages? Would you want to add support for another RPC mechanism as a new language becomes popular?

No? Neither do cloud providers.

So they use HTTP.

HTTP: It's a Protocol

The cloud speaks HTTP. HTTP is a protocol: it prescribes a specific on-the-wire representation for the traffic. Commands are sent to the cloud and results returned using the internet's most ubiquitous protocol, spoken by every browser and web server, routable by all routers, bridgeable by all bridges, and securable by any number of different methods (HTTP + SSL/TLS being the most popular, a.k.a. HTTPS). RPC mechanisms cannot provide all these benefits.

Cloud APIs all use HTTP under the hood. EC2 actually has two different ways of using HTTP: the SOAP API and the Query API. SOAP uses XML wrappers in the body of the HTTP request and response. The Query API places all the parameters into the URL itself and returns the raw XML in the response.

So, the lingua franca of the cloud is HTTP.

But EC2's use of HTTP to transport the SOAP API and the Query API is not the only way to use HTTP.

HTTP: It's an API

HTTP itself can be used as a rudimentary API. HTTP has methods (GET, PUT, POST, DELETE) and return codes and conventions for passing arguments to the invoked method. While SOAP wraps method calls in XML, and Query APIs wrap method calls in the URL (e.g.

Using raw HTTP methods we can model a simple API as follows:

RESTful APIs are "close to the metal" - they do not require a higher-level object model in order to be usable by servers or clients, because bare HTTP constructs are used.

Unfortunately, EC2's APIs are not RESTful. Amazon was the undisputed leader in bringing cloud to the masses, and its cloud API was built before RESTful principles were popular and well understood.

Why Should the Cloud Speak RESTful HTTP?

Many benefits can be gained by having the cloud speak RESTful HTTP. For example:

Where are Cloud API Standards Headed?

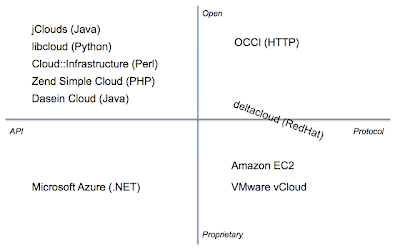

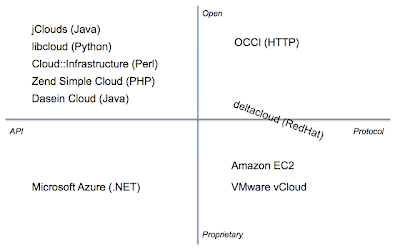

There are many cloud API standardization efforts. Some groups are creating open standards, involving all industry stakeholders and allowing you (the developer) to use them or implement them without fear of infringing on any IP. Some of them are not open, where those guarantees cannot be made. Some are language-specific APIs, and others are HTTP-based APIs (RESTful or not).

The following are some popular cloud APIs:

jClouds

libcloud

Cloud::Infrastructure

Zend Simple Cloud API

Dasein Cloud API

Open Cloud Computing Interface (OCCI)

Microsoft Azure

Amazon EC2

VMware vCloud

deltacloud

Here's how the above products (APIs) compare, based on these criteria:

Open: The specification is available for anyone to implement without licensing IP, and the API was designed in a process open to the public.

Proprietary: The specification is either IP encumbered or the specification was developed without the free involvement of all ecosystem participants (providers, ISVs, SIs, developers, end-users).

API: The standard defines an API requiring a programming language to operate.

Protocol: The standard defines a protocol - HTTP.

This chart shows the following:

What Does This Mean for the Cloud Developer?

The future of the cloud will have a single protocol that can be used to operate multiple providers. Libraries will still exist for every language, and they will be able to control any standards-compliant cloud. In this world, a RESTful API based on HTTP is a highly attractive option.

I highly recommend taking a look at the work being done in OCCI, an open standard that reflects the needs of the entire ecosystem. It'll be in your future.

Update 27 October 2009:

Further Reading

No mention of cloud APIs would be complete without reference to William Vambenepe's articles on the subject:

I'll explore this question for today's cloud, and touch upon what the future holds for cloud APIs.

Java? Python? Perl? PHP? Ruby? .NET?

It's tempting to say that the cloud speaks the same programming language whose API you're using. Don't be fooled: it doesn't.

"Wait," you say. "All these languages have Remote Procedure Call (RPC) mechanisms. Doesn't the cloud use them?"

No. The reason cloud providers do not offer an RPC interface for every language is simple: would you want to support a product that needed to understand the RPC mechanism of many languages? Would you want to add support for another RPC mechanism as a new language becomes popular?

No? Neither do cloud providers.

So they use HTTP.

HTTP: It's a Protocol

The cloud speaks HTTP. HTTP is a protocol: it prescribes a specific on-the-wire representation for the traffic. Commands are sent to the cloud and results returned using the internet's most ubiquitous protocol, spoken by every browser and web server, routable by all routers, bridgeable by all bridges, and securable by any number of different methods (HTTP + SSL/TLS being the most popular, a.k.a. HTTPS). RPC mechanisms cannot provide all these benefits.

Cloud APIs all use HTTP under the hood. EC2 actually has two different ways of using HTTP: the SOAP API and the Query API. SOAP uses XML wrappers in the body of the HTTP request and response. The Query API places all the parameters into the URL itself and returns the raw XML in the response.
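To make the Query API style concrete, here is a minimal Python sketch that only builds a request URL and never contacts EC2. The helper name `build_query_url` is hypothetical; the endpoint and the `Action=DescribeRegions` parameter are taken from the example URL used in this article. A real request would also need authentication and signature parameters, which are deliberately left out.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

# Endpoint taken from the article's example URL.
EC2_ENDPOINT = "http://ec2.amazonaws.com/"


def build_query_url(action, **params):
    """Encode an operation and its arguments entirely in the URL (hypothetical helper)."""
    query = {"Action": action}
    query.update(params)
    return EC2_ENDPOINT + "?" + urlencode(query)


url = build_query_url("DescribeRegions")
print(url)

# The receiving side can recover the operation name from the query string alone.
action = parse_qs(urlsplit(url).query)["Action"][0]
print(action)
```

The point of the sketch is that nothing travels in the request body: the operation and its arguments are ordinary URL query parameters, which is what distinguishes the Query API from the SOAP API's XML envelope.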

So, the lingua franca of the cloud is HTTP.

But EC2's use of HTTP to transport the SOAP API and the Query API is not the only way to use HTTP.

HTTP: It's an API

HTTP itself can be used as a rudimentary API. HTTP has methods (GET, PUT, POST, DELETE), return codes, and conventions for passing arguments to the invoked method. While SOAP wraps method calls in XML, and Query APIs wrap method calls in the URL (e.g. http://ec2.amazonaws.com/?Action=DescribeRegions), HTTP itself can be used to encode those same operations. For example:

    GET /regions HTTP/1.1
    Host: cloud.example.com
    Accept: */*

Using raw HTTP methods we can model a simple API as follows:

- HTTP GET is used as a "getter" method.

- HTTP PUT and POST are used as "setter" or "constructor" methods.

- HTTP DELETE is used to delete resources.
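The mapping above can be sketched as a tiny in-memory dispatcher. This is an illustration only, not any provider's API: the `handle` function, the `/regions/...` paths, and the stored values are all hypothetical, no network traffic is involved, and POST is omitted for brevity.

```python
# In-memory stand-in for cloud resources, keyed by URL path (hypothetical data).
resources = {}


def handle(method, path, body=None):
    """Dispatch on the HTTP method alone and return (status_code, payload)."""
    if method == "GET":  # "getter"
        if path in resources:
            return 200, resources[path]
        return 404, None
    if method == "PUT":  # "setter" or "constructor" at a known path
        created = path not in resources
        resources[path] = body
        return (201 if created else 200), body
    if method == "DELETE":  # removes the resource
        if path in resources:
            del resources[path]
            return 204, None
        return 404, None
    return 405, None  # method not supported by this sketch


print(handle("PUT", "/regions/example-1", {"name": "example-1"}))
print(handle("GET", "/regions/example-1"))
print(handle("DELETE", "/regions/example-1"))
print(handle("GET", "/regions/example-1"))
```

Note that the dispatcher needs no operation names of its own: the HTTP method says what to do, the path says which resource to do it to, and the status code reports the result.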

APIs built on bare HTTP methods in this way are commonly described as RESTful. RESTful APIs are "close to the metal" - they do not require a higher-level object model in order to be usable by servers or clients, because bare HTTP constructs are used.

Unfortunately, EC2's APIs are not RESTful. Amazon was the undisputed leader in bringing cloud to the masses, and its cloud API was built before RESTful principles were popular and well understood.

Why Should the Cloud Speak RESTful HTTP?

Many benefits can be gained by having the cloud speak RESTful HTTP. For example:

- The cloud can be operated directly from the command-line, using curl, without any language libraries needed.

- Operations require less parsing and higher-level modeling because they are represented close to the "native" HTTP layer.

- Cache control, hashing and conditional retrieval, alternate representations of the same resource, etc., can be easily provided via the usual HTTP headers. No special coding is required.

- Anything that can run a web server can be a cloud. Your embedded device can easily advertise itself as a cloud and make its processing power available for use via a lightweight HTTP server.
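As an illustration of the header-based benefits listed above, the following sketch shows conditional retrieval with the standard `ETag` and `If-None-Match` headers. The `make_etag` and `get_resource` functions and the sample representation are hypothetical; a real server would read the headers from an actual HTTP request rather than a dictionary.

```python
import hashlib


def make_etag(representation):
    """Derive an entity tag by hashing the representation's bytes."""
    return '"' + hashlib.sha256(representation).hexdigest() + '"'


def get_resource(representation, request_headers):
    """Return (status, headers, body) for a GET, honoring If-None-Match."""
    etag = make_etag(representation)
    if request_headers.get("If-None-Match") == etag:
        # The client's cached copy is still current: send no body.
        return 304, {"ETag": etag}, b""
    return 200, {"ETag": etag}, representation


body = b'{"regions": ["example-1"]}'  # hypothetical representation

# First fetch: no validator supplied, so the full body comes back.
status, headers, _ = get_resource(body, {})
print(status)

# Revalidation: the client echoes the ETag and receives an empty body.
status, _, payload = get_resource(body, {"If-None-Match": headers["ETag"]})
print(status, len(payload))
```

Because the validator lives in ordinary HTTP headers, any HTTP cache or client that understands them can take part without knowing anything about the cloud API behind the resource.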

Where are Cloud API Standards Headed?

There are many cloud API standardization efforts. Some groups are creating open standards, involving all industry stakeholders and allowing you (the developer) to use them or implement them without fear of infringing on any IP. Some of them are not open, where those guarantees cannot be made. Some are language-specific APIs, and others are HTTP-based APIs (RESTful or not).

The following are some popular cloud APIs:

jClouds

libcloud

Cloud::Infrastructure

Zend Simple Cloud API

Dasein Cloud API

Open Cloud Computing Interface (OCCI)

Microsoft Azure

Amazon EC2

VMware vCloud

deltacloud

Here's how the above products (APIs) compare, based on these criteria:

Open: The specification is available for anyone to implement without licensing IP, and the API was designed in a process open to the public.

Proprietary: The specification is either IP encumbered or the specification was developed without the free involvement of all ecosystem participants (providers, ISVs, SIs, developers, end-users).

API: The standard defines an API requiring a programming language to operate.

Protocol: The standard defines a protocol - HTTP.

This chart shows the following:

- There are many language-specific APIs, most of them open source.

- Proprietary standards are the dominant players in the marketplace today.

- OCCI is the only completely open standard defining a protocol.

- Deltacloud was begun by Red Hat and is currently open, but its initial development was closed and did not involve players from across the ecosystem (hence its location on the border between Open and Proprietary).

What Does This Mean for the Cloud Developer?

The future of the cloud will have a single protocol that can be used to operate multiple providers. Libraries will still exist for every language, and they will be able to control any standards-compliant cloud. In this world, a RESTful API based on HTTP is a highly attractive option.

I highly recommend taking a look at the work being done in OCCI, an open standard that reflects the needs of the entire ecosystem. It'll be in your future.

Update 27 October 2009:

Further Reading

No mention of cloud APIs would be complete without reference to William Vambenepe's articles on the subject:

Labels: cloud computing, developer, occi, standards
